Back in 2019 I built a small storage server for my home needs. Basically I store my music, movies, ebooks, and image collections here, enabling the use of DLNA to watch them on my TVs throughout the house.

Just the other day, I finally got the notice that I am running low on space. To be clear, that threshold is any remaining space under 1TB. As of this writing, I am down to 1020.7GB of storage space left on this single server. Time to plan for expanding my storage!
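The low-space notice boils down to a single comparison. Here is a minimal sketch, assuming binary units (1TB = 1024GB, which is what puts 1020.7GB under the threshold). The names `is_low_on_space`, `free_gb`, and `THRESHOLD_GB` are mine for illustration and do not come from any monitoring tool:

```python
import shutil

GIB = 1024 ** 3
THRESHOLD_GB = 1024  # assumption: the 1TB threshold expressed as 1024GB


def is_low_on_space(free_gb: float, threshold_gb: float = THRESHOLD_GB) -> bool:
    """Return True when the free space has dropped below the threshold."""
    return free_gb < threshold_gb


def free_gb(path: str) -> float:
    """Free space at `path` in binary gigabytes, read via the standard library."""
    return shutil.disk_usage(path).free / GIB


if __name__ == "__main__":
    # The figure quoted above; swap in free_gb("/some/mount") for a live check.
    print(is_low_on_space(1020.7))
```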

To move forward it is always best to know where you are starting.

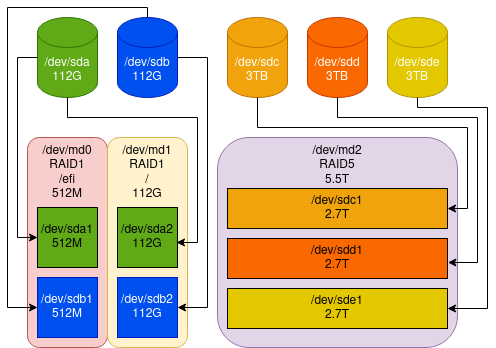

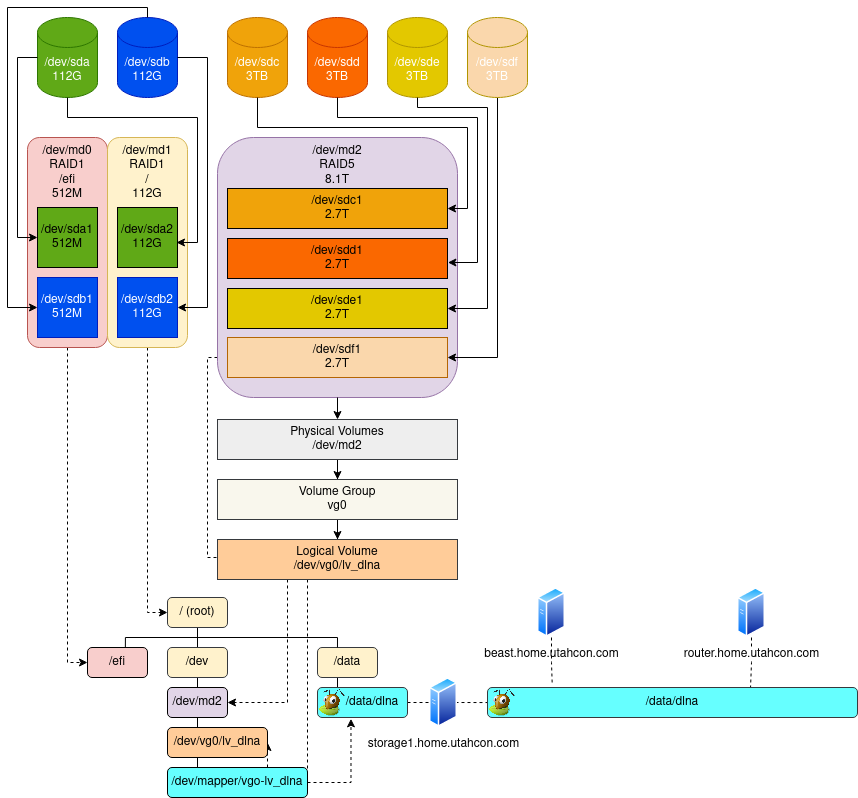

My storage server currently has 5 drives.

- /dev/sda and /dev/sdb are 112GB SSDs from Kingston, connected via SATA 6.0Gbps
- /dev/sdc, /dev/sdd, and /dev/sde are 3TB 3.5" 7200RPM drives from Hitachi, connected via SATA 3.0Gbps

/dev/sda and /dev/sdb are both partitioned with a 512MB boot (/dev/sda1, /dev/sdb1), and the remaining for

storage (/dev/sda2, /dev/sdb2)./dev/sda1 and /dev/sdb1 are arranged into a RAID-1 (data mirroring; no parity, no striping) known as /dev/md0/dev/sda2 and /dev/sdb2 are arrnaged into a RAID-1 (data mirroring; no partiy, no striping) known as /dev/md1/dev/sdc, /dev/sdd, and /dev/sde each get a single partition and are arranged into a RAID-5 (block-level striping

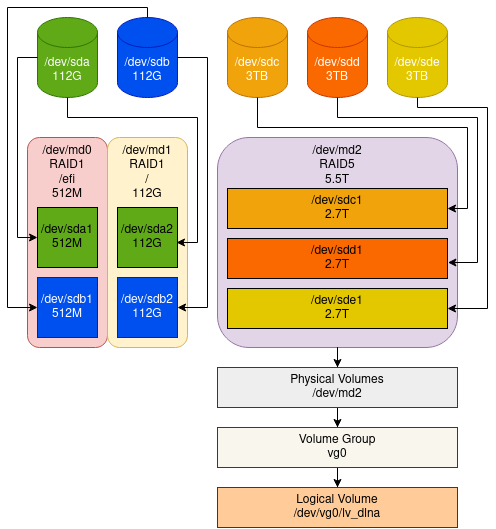

with distributed parity), allowing up to single drive failure, known as /dev/md2I expected to want to grow my DLNA library storage space over time, as such I implemented the following LVM configuration.

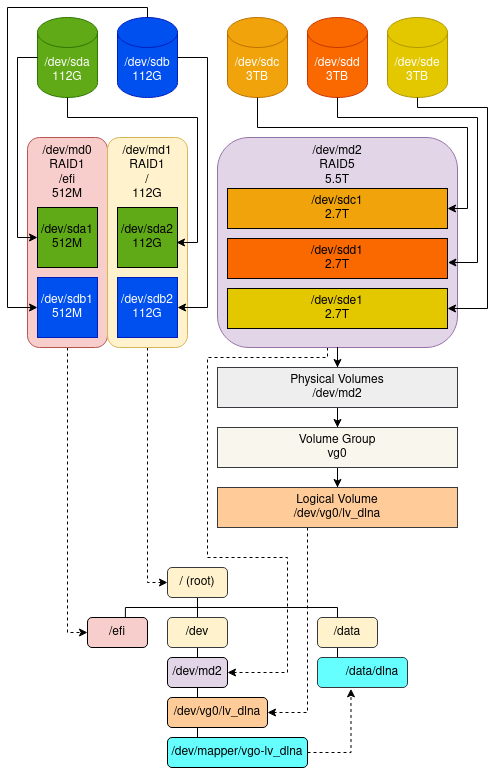

- /dev/md2 was added as a Physical Volume
- vg0 was created as a Volume Group
- /dev/vg0/lv_dlna was created as a Logical Volume

All this work results in a filesystem layout that resembles the following:

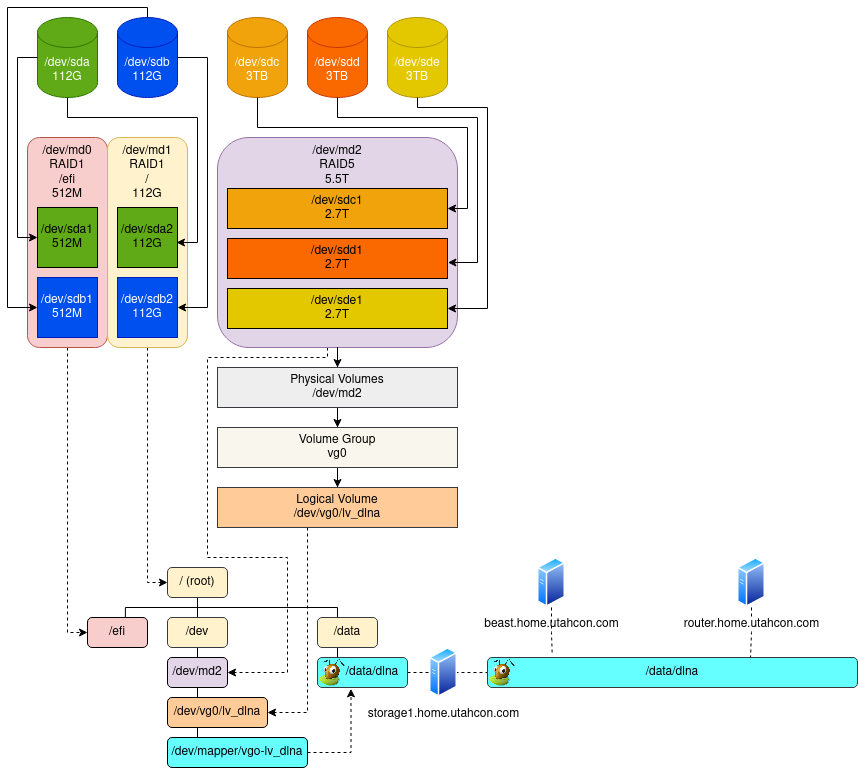

- /dev/md0 is mounted at /efi for UEFI booting
- /dev/md1 is mounted at / (root) for storage of the rest of the OS
- /dev/vg0/lv_dlna is mounted at /data/dlna

Now I could have just made the logical volume mounted at /data/dlna available across the network via NFS, but historically NFS has proven painful to manage, and it drops often enough to really just irk me. Most importantly, it has issues with multiple simultaneous writes. To solve all these problems, I implemented GlusterFS.

I simply added /data/dlna as a brick in GlusterFS, and now I have a network filesystem that I can mount on my personal workstation beast as well as other endpoints. As of now, this is not exposed to the internet at large. There are options I have considered for doing exactly that, but that is for another day.

There are lots of options for adding more storage to this scenario, and I would love to explore each of them. Alas, time and money come to the rescue. Whose rescue? Mine; my wife will kill me if I go too overboard. So what are the real options available to me?

All of these options are actually really easy to implement, and each comes with its own pros and cons (noted above).

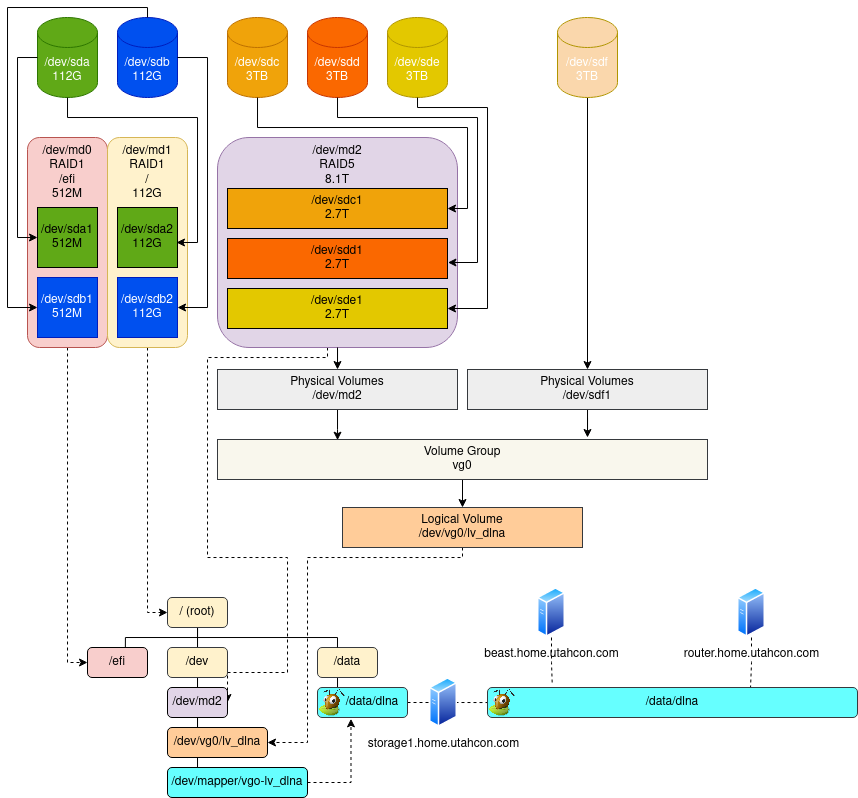

The first option is to add a drive to my RAID-5 at /dev/md2. Unfortunately I have used all the onboard SATA connections, and so I will have to utilize a PCI slot. Looking at Amazon and Newegg, a 4-port 6Gbps SATA 3.0 card is going to run me $30-100. I don’t specifically need hardware RAID support, as I am doing my RAID at the software level (right or wrong).

I will need a new drive as well, which is another $45 for 3TB. This will boost my overall storage to ~8TB immediately, and assuming I keep popping 3TB drives in, eventually ~18TB can be had through this design. This is then expandable by adding more PCI cards and more drives.
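The capacity figures follow from the RAID-5 rule that one drive's worth of space goes to parity. The sketch below works through that arithmetic for 3 to 7 drives of 3TB each. It prints both decimal terabytes (as drives are sold) and binary tebibytes (as many tools report them), since the choice of unit may explain why four drives come out near 8 while seven come out at 18. The helper name `raid5_usable_tb` is my own:

```python
DRIVE_TB = 3.0                   # decimal terabytes per drive, as sold
TB_TO_TIB = 10 ** 12 / 2 ** 40   # decimal TB -> binary TiB


def raid5_usable_tb(drives: int, drive_tb: float = DRIVE_TB) -> float:
    """Usable RAID-5 capacity: one drive's worth of space is spent on parity."""
    if drives < 3:
        raise ValueError("RAID-5 needs at least 3 drives")
    return (drives - 1) * drive_tb


for drives in range(3, 8):
    tb = raid5_usable_tb(drives)
    print(f"{drives} drives: {tb:.0f} TB ({tb * TB_TO_TIB:.1f} TiB)")
```

With these assumptions, four drives give 9 TB (about 8.2 TiB) and seven drives give 18 TB (about 16.4 TiB).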

All in all, this is looking like a simple $100 to add ~3TB of space, with plenty of room to grow.
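For reference, here is a rough sketch of the command sequence this option implies, written as a Python helper that only builds and prints the commands rather than running them. The new partition `/dev/sdf1` is a hypothetical name, and the function `grow_raid5_plan` is mine. The final filesystem resize is left out because it depends on which filesystem sits on the logical volume. Treat this as a plan to check against the mdadm and LVM man pages, not a tested procedure:

```python
import shlex


def grow_raid5_plan(array: str, new_partition: str, device_count: int,
                    logical_volume: str) -> list[list[str]]:
    """Return the commands, in order, without executing anything."""
    return [
        # Add the new partition to the array; it starts out as a spare.
        ["mdadm", "--add", array, new_partition],
        # Reshape the array so the spare becomes an active member.
        ["mdadm", "--grow", array, f"--raid-devices={device_count}"],
        # Once the reshape has finished, let LVM see the larger physical volume.
        ["pvresize", array],
        # Hand all newly free extents to the logical volume.
        ["lvextend", "-l", "+100%FREE", logical_volume],
    ]


if __name__ == "__main__":
    # /dev/sdf1 is a hypothetical device name for the new drive's partition.
    for command in grow_raid5_plan("/dev/md2", "/dev/sdf1", 4, "/dev/vg0/lv_dlna"):
        print(shlex.join(command))
```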

The next option identified is to expand via LVM. The advantage here is I don’t have to touch the existing RAID-5 at /dev/md2.

Again, I have used all the available SATA connections on the motherboard, so I am going to have to get a PCI expansion card for adding more drives. We’ll select the same card, and forgo the above addition to the RAID. Instead, we will partition and format this drive as follows for LVM.

The biggest downside to this option is that unless I add 3 drives and another RAID configuration, I am not going to have parity or striping on this drive, and it becomes a single point of failure. As a result, I am going to skip this option.
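The reasoning here can be put as a small model: a logical volume that spans several physical volumes is only as fault tolerant as its weakest member. The sketch below assumes a plain linear (non-mirrored) logical volume; the class and function names are illustrative, and `/dev/sdf1` is a hypothetical name for the bare new drive:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PhysicalVolume:
    name: str
    drive_failures_tolerated: int  # 0 for a bare drive, 1 for a RAID-5 array


def lv_failures_tolerated(physical_volumes: list[PhysicalVolume]) -> int:
    """Worst-case number of drive failures a linear LV across these PVs survives."""
    if not physical_volumes:
        raise ValueError("a logical volume needs at least one physical volume")
    return min(pv.drive_failures_tolerated for pv in physical_volumes)


md2 = PhysicalVolume("/dev/md2", drive_failures_tolerated=1)
bare_drive = PhysicalVolume("/dev/sdf1", drive_failures_tolerated=0)  # hypothetical

print(lv_failures_tolerated([md2]))              # today: RAID-5 only
print(lv_failures_tolerated([md2, bare_drive]))  # after adding a bare drive
```

Under this model the volume goes from surviving one drive failure to surviving none, because losing the bare drive takes part of the logical volume with it.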

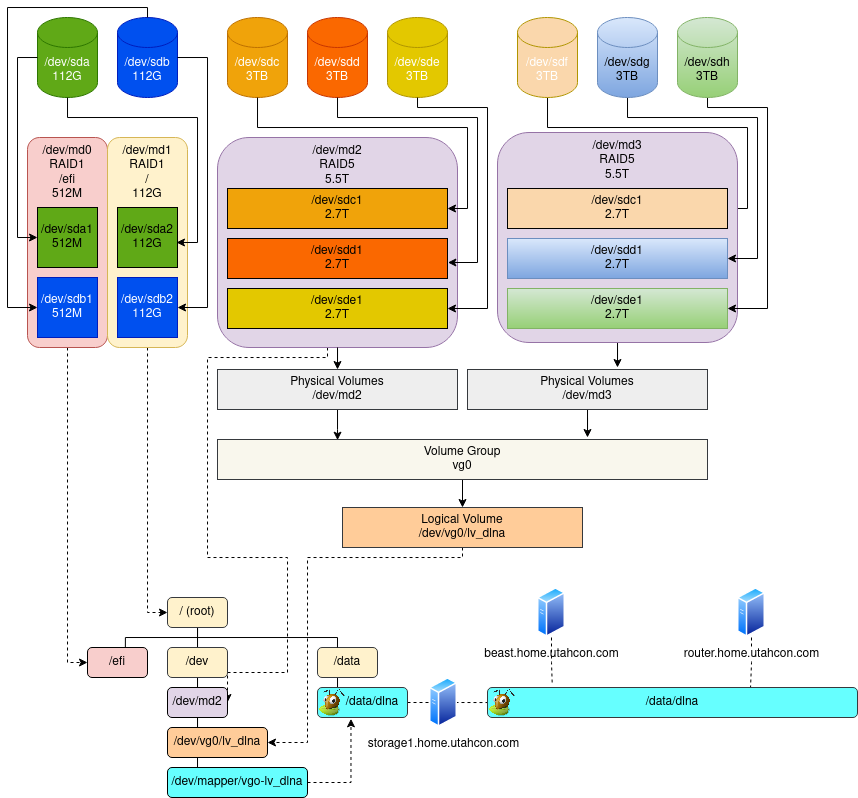

The third option I have decided to consider is adding a new PCI card and at least 3 new disks, creating a new RAID, and expanding our LVM.

Again, this option requires 2 additional disks, which increases the cost by about another $100. Another caveat is that the convenience of not touching /dev/md2 comes at a price: we lose capacity to the overhead of another RAID configuration. So we are going to pass on this option as well, at this time.

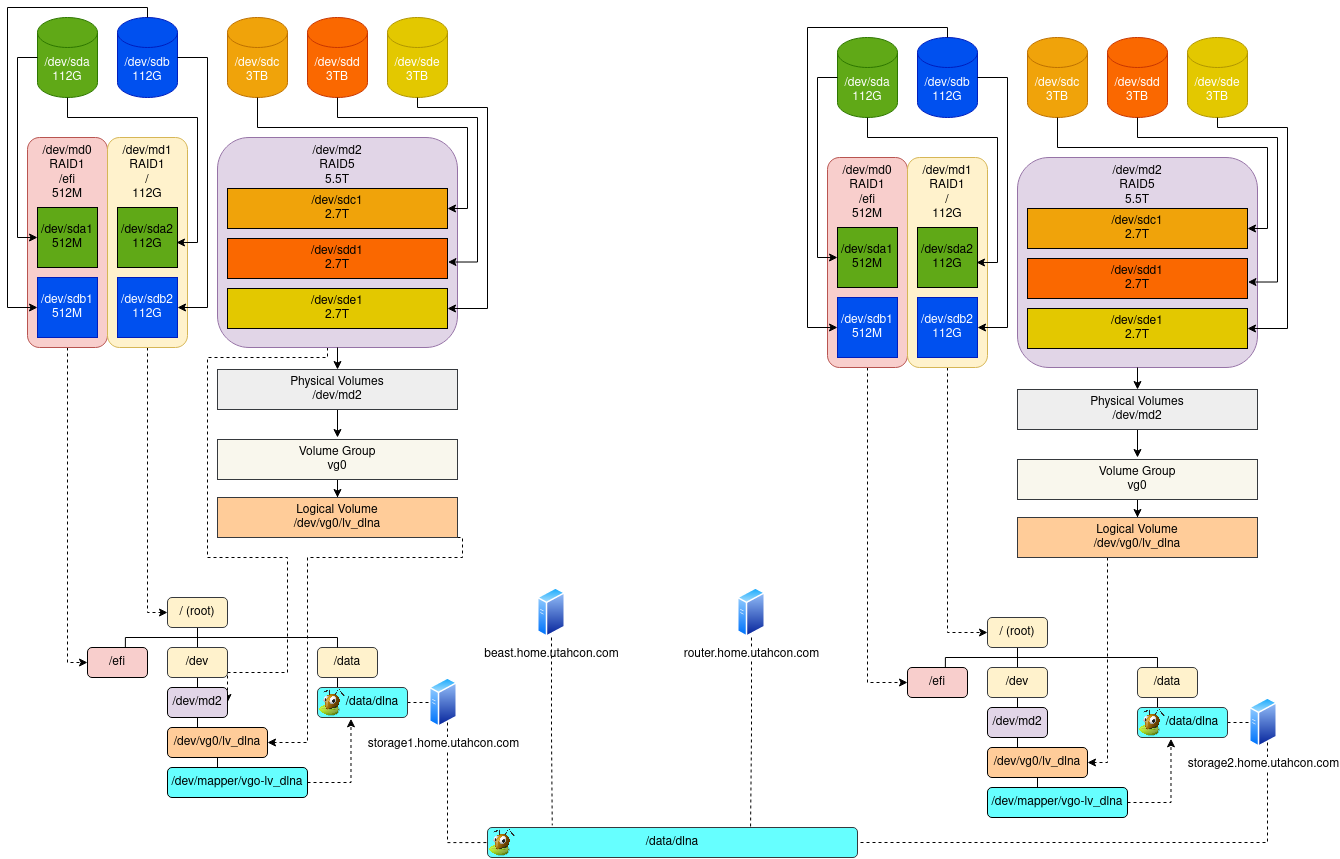

While the complexity of adding a server, and thus a GlusterFS brick, is not all that high, the only real benefit I get from this approach is that my original storage never goes offline.

To build this second storage server I would duplicate the first. I would get a desktop chassis from my local university surplus ($50), 2x 120GB SSDs ($20/ea), and 3x 3TB HDDs ($45/ea). This would put me at about $225-250 for a second server. All in all, not terrible for adding 100% more storage.
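To compare the options on equal footing, a quick cost-per-terabyte calculation helps. This sketch uses only the prices quoted in this post, and it assumes the second server adds 6TB usable (a duplicate 3x 3TB RAID-5, which is what "100% more storage" suggests). The function name `cost_per_tb` is mine:

```python
def cost_per_tb(cost_usd: float, added_tb: float) -> float:
    """Dollars spent per usable terabyte gained."""
    return cost_usd / added_tb


# (cost in USD, usable TB added); the 6TB figure is an assumption.
options = {
    "add a drive to the existing RAID-5": (100, 3),
    "second server, low estimate": (225, 6),
    "second server, high estimate": (250, 6),
}

for name, (cost, tb) in options.items():
    print(f"{name}: ${cost_per_tb(cost, tb):.2f} per TB")
```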

Having weighed all these options, I think I am going to go with option 1, Add Space to Existing RAID. The cost is about $100, and it will give me room to grow not just by one more disk, but by up to four more, for a total of 7 drives. For added benefit I am going to add the 5th disk, and simultaneously migrate from RAID5 to RAID6. This will cost me overhead, but it will buy me tolerance for an additional drive failure. I will report back when the work is done.
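The overhead of RAID6 is easy to quantify: it spends two drives' worth of space on parity instead of one. A minimal sketch of that trade-off for 3TB drives, with names of my own choosing:

```python
PARITY_DRIVES = {"raid5": 1, "raid6": 2}  # also the number of failures tolerated


def usable_tb(level: str, drives: int, drive_tb: float = 3.0) -> float:
    """Usable capacity in decimal TB for a RAID-5 or RAID-6 array."""
    parity = PARITY_DRIVES[level]
    if drives < parity + 2:
        raise ValueError(f"{level} needs at least {parity + 2} drives")
    return (drives - parity) * drive_tb


for drives in range(4, 8):
    print(f"{drives} drives: RAID5 {usable_tb('raid5', drives):.0f} TB, "
          f"RAID6 {usable_tb('raid6', drives):.0f} TB")
```

At any drive count in this range, RAID6 gives up 3TB of usable space compared to RAID5 in exchange for surviving a second drive failure.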